Praetorian : Around Space

Spatializing an amplified music band's live show

Praetorian is a French metal band founded in 2007 and composed of four members: a singer, a guitarist, a bassist and a drummer. They are influenced by Rammstein, Metallica, Avenged Sevenfold, Clawfinger, Mass Hysteria and Lofofora, among others.

In 2022, they initiated a musical research project, codenamed Praetorian : Around Space, in collaboration with SCRIME. It originally consisted of spatializing multitrack versions of a selection of songs from their album Furialis.

In 2024, they invited me to work with them on spatializing a live performance. I accepted, and we started brainstorming on this crazy idea.

The band uses an in-ear click track on stage to stay synchronized with a sample track, so it was easy to write all the spatialization in advance and play it back in sync with the music during the performance. We just had to find a way to write the sound trajectories for all 7 tracks of the 9 songs we planned to perform in public.
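To illustrate the idea of pre-written trajectories played back in sync with the music, here is a minimal Python sketch. It is not the project's Max/MSP implementation: the breakpoint format `(time_s, x, y)` and the function name `position_at` are assumptions made for this example. It looks up a source position at a given playback time by linear interpolation between recorded points.

```python
from bisect import bisect_right


def position_at(trajectory, t):
    """Return the (x, y) position of a source at playback time t (seconds).

    trajectory is a list of (time_s, x, y) breakpoints sorted by time.
    This format is hypothetical and only serves the illustration.
    """
    times = [point[0] for point in trajectory]
    # Hold the first or last position outside the recorded range.
    if t <= times[0]:
        return trajectory[0][1:]
    if t >= times[-1]:
        return trajectory[-1][1:]
    # Find the two breakpoints surrounding t and interpolate linearly.
    i = bisect_right(times, t)
    t0, x0, y0 = trajectory[i - 1]
    t1, x1, y1 = trajectory[i]
    a = (t - t0) / (t1 - t0)
    return (x0 + a * (x1 - x0), y0 + a * (y1 - y0))


# A source moving along the front, then down the right side of the audience.
guitar = [(0.0, -1.0, 1.0), (2.0, 1.0, 1.0), (4.0, 1.0, -1.0)]
print(position_at(guitar, 1.0))
print(position_at(guitar, 3.0))
```

Because the playback time is driven by the same clock as the click and sample tracks, the same lookup gives the same position at every performance.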

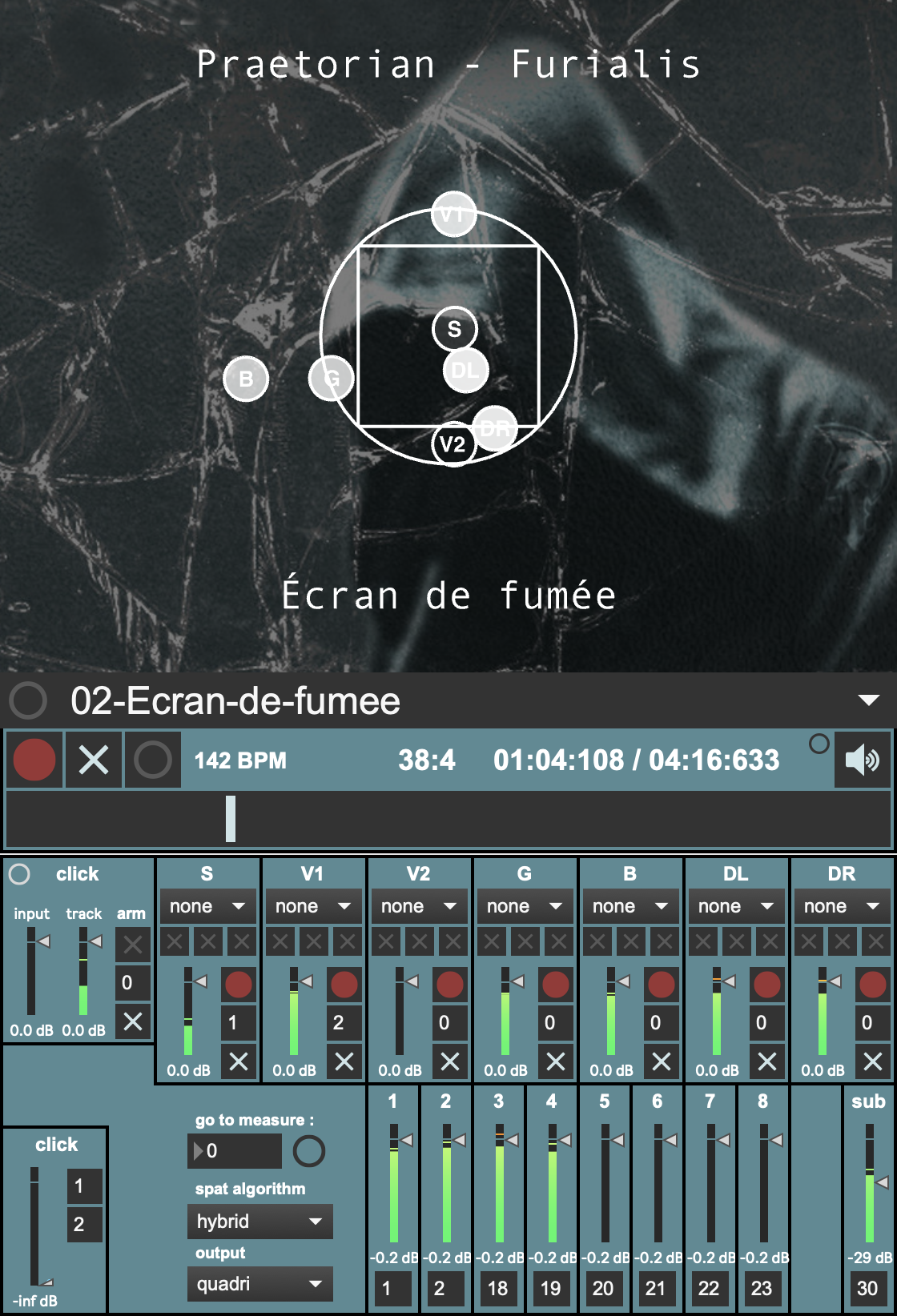

The shortest path to this result turned out to be building custom software in Max/MSP, entirely dedicated to the project. The quickest way to write the spatialization trajectories was to capture them from a gestural control device (an old MIDIfied GameTrak controller, actually) or directly on-screen with the mouse, one track after another or by subgroups. So I made this DAW-like interface and started working with the multitrack files from the studio recordings.

We went for the simplest diffusion system that we knew we could easily deploy in a variety of venues: a horizontal quadraphonic setup made of a classic front stereo pair and a second pair behind the audience (the white square you can see in the software GUI represents the audience seen from above, with the speakers located at the corners and the stage along the upper side). Honestly, if we had the opportunity to restart the project from scratch, I would go for something closer to a cheap Wave Field Synthesis system with more frontal speakers, while keeping at least a pair behind. The stage deserves more than two speakers to get a good immersive result, and it turns out that too many surrounding trajectories feel weird to the audience while the physical sources (i.e. the musicians, except for the sample track) are pretty much static on stage. But well, we had to start somewhere...

The spatialization engine can render the trajectories either on a circle of N evenly spaced loudspeakers, or in binaural or transaural stereo using IRCAM's Spat library. The algorithm used on the loudspeakers, both in the studio and during the concert, is experimental. I designed it and named it SBAP (Steer-Based Amplitude Panning); it still needs more testing.
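For readers unfamiliar with amplitude panning on a ring of loudspeakers, here is a minimal Python sketch of a common baseline: pairwise constant-power panning between the two speakers adjacent to the source direction. This is not SBAP, whose details are not given here. The function name `ring_pan_gains` and the convention that speaker k sits at an azimuth of k × 360/N degrees are assumptions made for this example.

```python
import math


def ring_pan_gains(azimuth_deg, n_speakers):
    """Pairwise constant-power gains on a ring of evenly spaced speakers.

    Assumed convention (hypothetical): speaker k is located at an azimuth
    of k * 360 / n_speakers degrees. This is a generic baseline, not SBAP.
    """
    if n_speakers < 2:
        raise ValueError("at least two speakers are needed")
    spacing = 360.0 / n_speakers
    # Position of the source expressed in units of speaker spacing.
    pos = (azimuth_deg % 360.0) / spacing
    lower = int(pos) % n_speakers
    upper = (lower + 1) % n_speakers
    frac = pos - int(pos)
    gains = [0.0] * n_speakers
    # Sine/cosine law keeps the summed power constant across the pair.
    gains[lower] = math.cos(frac * math.pi / 2)
    gains[upper] = math.sin(frac * math.pi / 2)
    return gains


# A source halfway between speakers 0 and 1 of a quadraphonic ring.
gains = ring_pan_gains(45.0, 4)
print([round(g, 3) for g in gains])
```

Only two speakers are active at any time, and the squared gains always sum to 1, so the perceived loudness stays roughly stable as a source moves around the ring.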

We presented the project to the public during an experimental concert which took place on the 18th of October 2024 at L'inconnue (Talence).

We published a paper at the Journées d'Informatique Musicale 2025, describing the stage setup, the custom software, the underlying principles of the writing of spatialization trajectories, and the SBAP spatialization algorithm in detail.

A few months later, I supervised an intern in the final year of his Master's degree in Computer Science at the University of Bordeaux. His mission was to explore the relationships between the existing spatialized sources' trajectories, their musical content, and the global musical structures. The ultimate goal of this internship was to create a system that would learn from existing spatialized songs and propose source spatializations for new multitrack songs. The task turned out to be difficult, partly because the data set was very small (only 9 songs from Praetorian's Furialis album), and also because there were so many descriptors and models to try out in a limited time span. The research subject is still of interest, but it would need to be pursued together with more artists who share the same taste for spatialization.

The project was showcased again during three consecutive concerts which took place on the 13th of December 2025 at the Data Party event in Bordeaux. This time we played back the drum tracks from the album, as the drummer was injured and couldn't perform with the band.

PRAETORIAN @ DATAPARTY 2025 - © Annick Mersier